My Time At The Deep Learning And Reinforcement Learning Summer School (DLRLSS) in Edmonton (Canada)

Tobias Hinz

06 August 2019

Reading time: 17 minutes

Welcome to Edmonton! We're excited to be kicking off the 2019 Deep Learning Reinforcement Learning Summer School with @AmiiThinks at @UAlberta. Started in 2005 by CIFAR fellows, the #DLRLSS is now the premier #AI training event of the year.https://t.co/gNigLgQPQl pic.twitter.com/kwksn0fKyd— CIFAR (@CIFAR_News) July 24, 2019

I was lucky enough to spend the last two weeks (22. July - 3. August) in Edmonton (Canada) at the Deep Learning and Reinforcement Learning Summer School (DLRLSS) 2019.

DLRLSS is one of the oldest summer schools that is purely focused on deep learning and goes back until 2005 when it first started (back then with only about 20-30 attendees). Today it is one of the largest summer schools for DL and RL with about 300 participants (more than 1200 applications) from all over the world. This year’s summer school was hosted by the University of Alberta and Amii (Alberta Machine Intelligence Institute), supported by CIFAR, the Vector Institute, and Mila.

Media Release: World’s Top AI Talent Convenes in Alberta #YEGMedia #YYCMedia #CDNMedia #DLRLSS https://t.co/B5GY7LCBvP— Amii (@AmiiThinks) July 24, 2019

The summer school itself is quite long and intense. In total 9 days of lectures with about 4 lectures per day. Breakfast, lunch, and coffee were provided. I stayed in the Lister Center, one of the student dorms of the University of Alberta, which was about a 15-minute walk from the lecture hall. Overall I really enjoyed spending my time at the university. The two hour lunch breaks, as well as the coffee break in the afternoon provided plenty of opportunities to talk to people with different backgrounds (Mathematics, Physics, Computer Science, Neuro-Science, Statistics, Medicine, …) at different stages of their career (Master or PhD student, Postdoc, Professor, Industry Researcher).

Thanks to @FarrowSandwich for the most delicious food in the most adorable packaging! Enjoy your lunch, #DLRLSS students! pic.twitter.com/9AVb1aXBhA— Amii (@AmiiThinks) July 24, 2019

During most of the days, participants also presented some of their own work through posters. The posters were hung up in the morning and stayed up all day, with the opportunity of looking at them and talking to the persons who did the research. I presented my work (from this year’s ICLR) on the first Thursday. As it so happens Akash presented his work on the Layout VAE at a poster right next to me. His work is on generating scene layouts from image captions, which is the information that my work can subsequently use to generate the resulting images.

#DLRLSS Students, digest some new ideas during lunch - check out the poster sessions in the lobby! pic.twitter.com/8xc7Vw9UZL— Amii (@AmiiThinks) July 25, 2019

On the Tuesday before the DLRLSS started there was an invited talk by David Silver who leads the RL research group at DeepMind and was one of the lead researchers on the AlphaGo algorithm. He talked about the development process of AlphaGo and its subsequent iterations which played even stronger and also learned to play Chess and Shogi.

It’s @VectorInst Night at #DLRLSS! pic.twitter.com/D960EKVwDh— Amii (@AmiiThinks) July 26, 2019

While the classes during the summer school ended at 4:30 pm every day most evenings were still busy with social events. Both the Vector Institute and Mila had a social mixer event which provided the opportunity to indulge in food and drinks and talk with researchers from the respective labs. On the first Friday, we had an AI Community Crawl with four stops (Amii, DeepMind, Borealis AI, and Craft) which gave us the opportunity to visit the local offices of some of the research labs and talk to their researchers.

At the final #DLRLSS social event, the @MILAMontreal Mixer! Thanks so much to Mila staff, CEO Valérie Pisano, Yoshua Bengio, and @LaCiteFrancoYEG for the delightful evening. pic.twitter.com/NZtgQ2acvH— Amii (@AmiiThinks) August 2, 2019

On Monday the movie AlphaGo was shown in one of the local movie theaters, while Tuesday ended with a live improv show called Improbotics . Improbotics combines improvisation with AI in entertaining and unpredictable ways. For example, a machine learning system trained on improvisation games generates new games on the fly (which make more or less, usually less, sense) which the actors try to play. In another instance, a power point presentation was created automatically for a given topic (in our case: ghosts) and one of the actors then presented the power point slides without knowing what the slides would show. Finally, there was a long scene in which one of the actors could only say sentences provided by an NLP model conditioned on the current scene and conversation.

Improbotics is fun! :)) @UAlberta #DLRLSS pic.twitter.com/cWINYeF4bW— tanushri (@tanushri_c) July 31, 2019

The second Wednesday afternoon took place in the downtown convention center. After two more lectures about RL, we had a career panel lead by Martha White with Rich Sutton and Yoshua Bengio. Questions could be asked via Slack in the days leading up to the event. Some of the central outcomes were that it is difficult to be ethical and make (a lot of) money in AI, that work-life balance is important (even though both Rich and Yoshua admitted that their work-life balance was not very good), that curiosity and trying new things off the beaten path are important, and that while DL might be in a bit of a hype bubble at the moment it is most likely here to stay as there are now companies which make real (and a lot of) money by using it.

Super panel on #ArtificialInteligence at #DLRLSS moderated by @white_martha. Rich Sutton & #YoshuaBengio gave #research career advice, talked about #ethics, achieving #WorkLifeBalance, choosing a research topic, impact of #AI on #society etc. Kudos to @AmiiThinks for organising. pic.twitter.com/M4OoOfK9xb— Osmar Zaiane (@ozaiane) August 1, 2019

The evening concluded with a career fair at which the various sponsors had booths and representatives (both researchers and recruiters).

So much great conversation at last night's @ABInnovates Career Mixer in Edmonton, where the world's top young #AI talent has converged for the #DLRLSS. pic.twitter.com/glnRU3txvO— CIFAR (@CIFAR_News) August 1, 2019

Overall, the two weeks were a great experience from me. Many world-renowned researchers such as Yoshua Bengio and Rich Sutton actively participated during the summer school and were available to talk to during the social events in the evenings.

pic.twitter.com/8yHN3s8Jpm— CIFAR (@CIFAR_News) July 25, 2019

Besides that, the participants themselves were a very impressive group with many different backgrounds and all of them interested in learning more about (deep and reinforcement) learning. No lunch/coffee break was the same, but always consisted of talking with people from other fields, and the social events provided opportunities for even more discussions. I, therefore, definitely recommend you apply for next year’s summer school (organized by Mila in Montreal) if you are at all interested in the topics of DL and RL.

10 days. 36 countries. 300 students. We had such a blast with you all at #DLRLSS this year! A special thanks to all attendees, sponsors, and partners including @CIFAR_News. pic.twitter.com/yqMtuv627m— Amii (@AmiiThinks) August 2, 2019

In the next parts of this blog post I will describe some of the highlights of the different lectures, but with the big disclaimer that there were many more very interesting and well-presented lectures then the small subset I present below. The videos of the talks are also supposed to be uploaded sometime in the next weeks so that you can watch all of them in your own time if you are interested.

Deep Learning Lectures

Hugo Larochelle (Google Brain) started the lectures on Wednesday morning with an intro into Deep Learning. It covered basic topics such as forward and backpropagation, loss functions, and gradient descent algorithms. He also presented a quite intuitive explanation of the universal approximation theorem which states that a feed-forward neural network (NN) with a single hidden layer with a finite amount of neurons can approximate any continuous function arbitrarily well.

In the second part, he talked about the optimization landscape of deep neural networks. His main point here was that it seems that the high-dimensional parameter space of modern neural networks can help with optimization, as there is always a way for gradient descent to find a direction in parameter space to further reduce the loss. This relates to the finding that in modern deep neural networks with millions (or even billions) of learnable parameters local optima do not seem to be a problem and it is rather saddle points that slow down training. In fact, consider a dataset that is “labeled” by a randomly initialized NN by feeding it some input data. Training another NN with the same structure and the same number of parameters on this dataset is likely to fail. However, training a larger NN is more likely to work and to reach 100% training accuracy. Essentially, the main takeaway was that the large number of learnable parameters in modern NNs may be a blessing that can make the optimization easier by providing more directions through which to escape from saddle points during training. This hypothesis is further supported by recent work that shows that, while NNs with fewer parameters can reach the same performance as much larger NNs on the same tasks, it is difficult to directly optimize the smaller NNs. The rest of the talk mentioned some more interesting characteristics of deep NNs that are not yet well understood, such as finding “flat” optima in parameters space (which tend to generalize better than “sharp” optima), and the bias/variance trade-off.

Yoshua Bengio from @MILAMontreal presents Recurrent #NeuralNetworks (RNN) at the #DLRLSS (#DeepLearning and #ReinforcementLearning #summerschool) organized by @AmiiThinks and @CIFAR_News @UAlberta pic.twitter.com/hcks9SEBa6— Osmar Zaiane (@ozaiane) July 25, 2019

Yoshua Bengio (Mila) gave two talks, one about recurrent neural networks (RNNs) and one about future research directions and “What’s next?” in AI and deep learning. In his talk about RNNs he talked about the different use cases of RNNs (like sequence to vector, vector to sequence, sequence to sequence) and what they can be used for (e.g. time series forecasting, machine translation, and language modeling). A big part of the talk was about why learning long-term dependencies with gradient descent is difficult. This relates to the challenge of training recurrent models in a way that they can learn and understand how events from the (far) past can influence current outputs/predictions. Essentially this comes down to the well-known problem of vanishing or exploding gradients (especially for long input sequences) in RNNs. This is because of the fact that in an RNN the gradient is a product of multiple Jacobian matrices (one Jacobian for each forward computation). As a result, if the Eigenvalues of the Jacobians is greater than one this leads to exploding gradients, while Eigenvalues of smaller than one lead to vanishing gradients (if some Eigenvalues are greater than one and some are smaller than one this leads to problems with exponentially growing variance in the gradients). He then presented multiple ways of dealing with these problems, e.g. LSTMs, gradient clipping, skip connections, and multiple hierarchical time scales. The talk concluded with an overview of current approaches to attention and how to use them to extend NNs with an external memory to explicitly deal with information from far back in the past by storing and retrieving it from the memory.

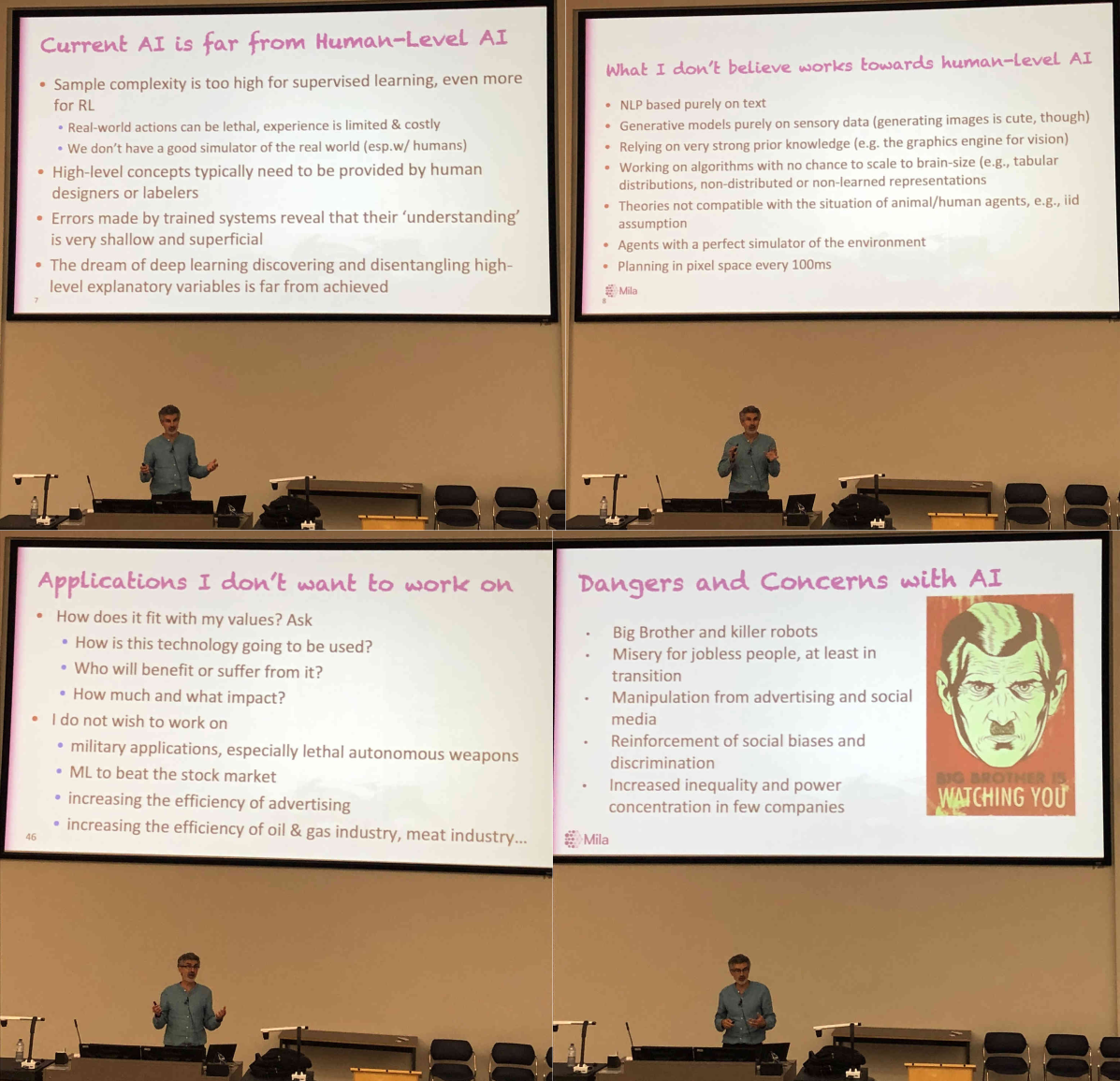

In his second talk “What’s next?” he talked about the current status of AI (Deep Learning) and what he thinks is important for the future. Some of his key beliefs about what will not lead to human-level AI are:

- NLP based purely on text (as it is predominantly done at the moment) - instead a world model and language must be learned jointly, i.e. grounded language understanding,

- generative models based purely on sensory data, e.g. only generating images in pixel space - instead, models need to have a better, more abstract understanding of the world and how it works, i.e. generative models in latent space,

- working on algorithms that do not scale to brain-size - e.g. tabular Reinforcement Learning as opposed to Deep Reinforcement Learning,

- relying on very strong prior knowledge and perfect simulators of the world,

- planning in pixel space - instead, planning should take space at a higher level of abstraction, i.e. in latent space and not in pixel space.

In today’s final session of #DLRLSS, @MILAMontreal’s Yoshua Bengio asks: What’s Next? #AI #ML pic.twitter.com/cvmJI4VTkH— Amii (@AmiiThinks) July 27, 2019

Essentially, most of his talk was about what needs to be done and improved (in his opinion) to get closer to human-level intelligence and some concrete approaches to get closer to the goal of human-level AI (e.g. Meta- and Transfer-Learning, self-supervised learning, grounded language learning, disentangled representations). Finally, he presented an outlook of the future, what he does not want to work on (think: killer robots, agents for the stock market, increasing the efficiency of advertising/the oil and gas industry/…) and what the future problems might be that we have to deal with (think: surveillance state, manipulation in social media, increased inequality, …). Overall it was quite an inspiring talk about many different topics and (high-level) insights into potentially important future directions of the field of deep learning.

Ke Li gave an interesting talk about generative models and how they work. I especially liked his visual introduction to the (subtle) characteristics of the Kullback-Leibler (KL) divergence and the differences in optimizing for the forward (mode covering) or backward (mode dropping) KL divergence and its connection to Maximum Likelihood Estimation (which is equivalent to minimizing the KL divergence from the model distribution to the data distribution). Using the KL divergence he explains some of the characteristics and challenges of GANs and VAEs. The VAE algorithm directly maximizes a lower bound of the log-likelihood (ELBO). However, the gap between the ELBO and the true likelihood (which we cannot directly optimize since it is intractable) consists of the reverse (remember: mode dropping) KL divergence between the approximate and the true latent distribution conditioned on the input. This can lead to a phenomenon called posterior collapse, in which the decoder (if powerful enough) effectively ignores the latent representation.

After a morning of Hands-On #DL, @ucberkeley’s Ke Li talks GANs! #AI #DLRLSS pic.twitter.com/OtENxDmUxw— Amii (@AmiiThinks) July 27, 2019

The GAN algorithm, on the other hand, explicitly minimizes the reverse KL divergence, which leads to the known problem of mode dropping, i.e. of only generating data belonging to a subset of the original data distribution instead of generating samples that cover the full data distribution. While optimizing the forward KL divergence would lead to better recall (think: how many real data examples can be generated) in generative models, optimizing the reverse KL divergence leads to higher precision (think: how many generated samples look real). This is one of the reasons why images generated by GANs tend to have high quality and why GANs often show a mode dropping behavior, i.e. are only able to generate samples from a subset of the data distribution.

Finally, he introduced some of his own work, called Implicit Maximum Likelihood Estimation (IMLE) which tries to combine the characteristics of VAEs (mode coverage) and GANs (does not require evaluation of the likelihood during training). Intuitively this approach tries to make sure that generated data points lie close to real data points in the data distribution (high precision), while also making sure that each real data point (from the training set) has generated samples close by (high recall). He showed some promising results on various tasks such as image super-resolution and image synthesis from scene layouts and I am curious to see which further developments we will see with this approach.

Blake Richards gave a very interesting talk about biological deep learning, i.e. the similarities between techniques in the deep learning community and their (potential) counterpart in the brain. The motivation for this is that deep learning models are a better fit to neocortical representations than models developed by neuroscientists which could suggest that our neocortex does something similar to deep learning. Now, (almost) all current deep NNs (certainly the most successful ones) are trained with some form of the backpropagation algorithm for which we usually need a loss function, the transpose of the downstream weight matrix, a derivative of the activation function, and separate forward and backward passes.

Amazing lecture by neuroscientist Blake Richards.

Our brains do not do backprop specifically, but that doesn't mean they don't do deep learning (end-to-end) #DLRLSS pic.twitter.com/0CGWywS6DR— Michael Azmy (@MichaelAzmy) July 27, 2019

These things are considered to be somewhat problematic in a biological brain, but current results indicate that it might indeed be possible (!) for the brain to do some form of backpropagation. Blake then proceeded to go through each of the four individual demands for backpropagation and shows approaches and explanations of how a biological brain could actually implement and them. This could indicate (!) that the biological brain might, in fact, implement some form of “deep learning”, i.e. updating its parameters (synapses) in an end-to-end manner based on some error term and forward and backward passes. One big caveat is that all of the current science on this deals only with feed-forward networks but can still not at all explain what we know as backpropagation-through-time, i.e. RNNs. Nonetheless, this was a very interesting talk that connected many areas of “deep” and “biological” learning.

Reinforcement Learning Lectures

Csaba Szepesvári gave a nice introduction to bandits, the “small brother of reinforcement learning”. A bandit can be thought of as a “game” in which you get to choose one of N actions (“arms”) per round and get a (stochastic) reward for picking the particular arm. In the beginning, you don’t know anything about the rewards choosing one of the different arms gives you, but the more often you pick a certain arm, the surer you become about the (average) reward you get from the given arm. The overall goal is to maximize your total reward (possibly limited by the total number of actions you are allowed to take).

The challenge is to (similar as in RL) trade-off between exploration (trying each arm multiple times to get a good estimate of its reward) and exploitation (choosing the arm that looks like it gives you the most reward). One example of this is drug testing, where you might have different drugs to treat a given condition and you don’t know yet which drug works the best. Obviously you need to try each drug multiple times (on different subjects?) to get a decent estimate of how good it is, but, on the other hand, you want to know which drugs is the best one as fast as possible so that more subjects can benefit from it.

An important concept in bandits is the “regret”, which is the total (expected) reward you would get if you had taken the optimal action from the start. Evaluating based on the regret makes it easier to compare different bandits since it is invariant to shifting distributions. Algorithms for finding the best strategy, i.e. which bandit to pick for maximal reward, are similar to RL algorithms such as e.g. ε-Greedy, and the rest of the lecture dealt with different approaches for finding better algorithms, as well as how to choose and design them.

Day five of #DLRLSS continues with @UAlberta's/Amii's @CsabaSzepesvari's session on Bandits! pic.twitter.com/t8RQ3kRgMk— Amii (@AmiiThinks) July 29, 2019

Martha White gave a very well prepared lecture about model-based reinforcement learning. Many current RL approaches do not use model-based RL but instead only learn from the data and the environment directly. This can make them very sample inefficient since they are not able to “simulate” new trajectories or reason about possible consequences of actions they take. A different (potentially better) approach is to also learn a model of the world the agent is acting in. Through this, the agent can become more sample efficient. Probably the easiest model-based RL algorithm is to keep a buffer of past state, action, reward, next state tuples and to use them to update the algorithms policy even while obtaining new data by taking new actions. This is called “experience replay” and is widely used in the RL community. Martha then continues to talk about Dyna which learns a model of the world while interacting with it. The key idea is to use RL updates (e.g. Q-learning) on the simulated experience from the model as if these simulated experiences come from the real world. The two key challenges here are about what kind of model to learn (a simple form would be a buffer as explained above), and the search-control algorithm, i.e. how to choose which experiences from the model should be used for learning (could be randomly sampled from the model or some more advanced algorithm).

Martha White of Amii/@UAlberta speaks about Model-Based RL at #DLRLSS! pic.twitter.com/iFATJXnryR— Amii (@AmiiThinks) July 30, 2019

Dale Schuurmans talked about some underlying foundations for RL. It wasn’t, in fact, very specific to RL itself, but more a general view of some of the underlying concepts of optimization. Again, we are in an RL setting in that we take an action at a given time step and obtain some reward. One of the key challenges in RL is that we only get the reward for the taken action, but do not know which reward we would have gotten for any of the actions we didn’t take. The most straight forward thing to do in this case would be to optimize the thing we care bout, i.e. the expected reward. However, as Dale points out, nobody actually does this, since this loss has plateaus everywhere and it is nearly impossible to reach a global optimum. This is similar to the approach in supervised training, where nobody actually optimizes what we care about (the expected accuracy), but everyone optimizes the maximum log-likelihood for similar reasons. In fact, the optimizing the maximum likelihood in supervised training usually gives better results on accuracy than optimizing for the expected accuracy directly. Dale then goes on to show better approaches to optimize what we care for (expected reward) by optimizing a surrogate loss, e.g. the entropy regularized expected reward. The main point here (and it also applies to classic supervised learning) is that we do not optimize directly what we care about, but rather optimize a surrogate which has better characteristics (e.g. better derivatives, convexity, calibration, etc). He continues to apply this to the specific setting of RL where we have missing data points (since we only get feedback about the action we took, but not the other actions) and possible ways to deal with this.

Dale Schuurmans from @AmiiThinks @UAlberta & @GoogleAI presents #Optimization in #ReinforcementLearning at #DLRLSS. He says the typical Environment-Agent figure is "dangerous" because it makes RL look simple but it masks many complex subproblems. #MachineLearning #AI #Edmonton pic.twitter.com/wXlJ97wVdW— Osmar Zaiane (@ozaiane) July 30, 2019

While James Wright’s talk was supposedly about Multi-Agent RL he started his presentation with a disclaimer that “multi-agent systems aren’t solutions, they are problems”. Most of his talk was to highlight why multi-agent systems don’t work yet and why they are an important challenge to tackle. However, a lot of his lecture was spent on game theory and how it could apply in multi-agent systems. The parts about game theory are quite general and independent of RL, so if you are interested in game theory I definitely recommend to check out his talk. He defines a multi-agent system as a system that contains multiple agents (duh) each of which has distinct goals, priorities, and rewards. This explicitly means that the agents do not have the same goal (i.e. they are not necessarily cooperative). This is where game theory enters, which James calls “multi-agent mathematics”, i.e. the “mathematical study of interaction between multiple rational, self-interested agents”. He then shows several (fun) game-theoretic games (e.g. the Prisoner’s and the Traveler’s dilemma) and how they relate to the Nash equilibrium and Pareto optimality. The important finding is that individually rational behavior does not always lead to globally optimal behavior and that it is even possible that individual optimization can lead to the worst possible global outcome (e.g. the only Nash equilibrium in the Prisoner’s dilemma is also the only outcome that is Pareto dominated). My final takeaway from the lecture was that while game theory is cool and has important applications it is not enough to reason precisely about multi-agent interactions, especially in the real world where it is often difficult to calculate the Nash equilibrium and where agents are not perfectly rational.

James Wright from Amii/@UAlberta begins the afternoon of #DLRLSS today at @yegconference! pic.twitter.com/zU8Q8irPld— Amii (@AmiiThinks) July 31, 2019

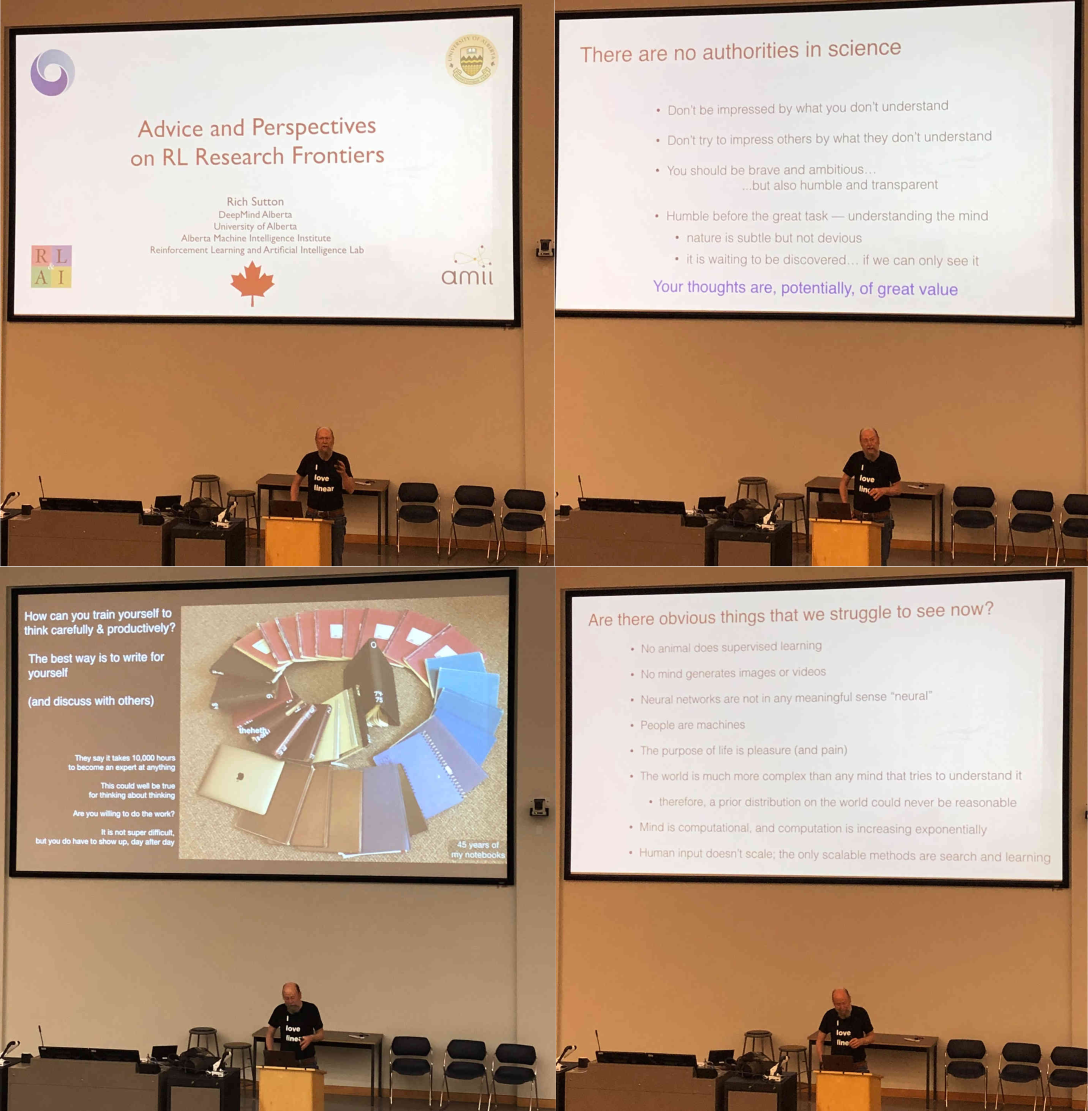

The DLRLSS concluded with a talk by Rich Sutton about advice for (young) researchers and research frontiers in RL. It was a very opinionated and entertaining talk with many things to agree and disagree. He started out with stating that there are no authorities in science, that you shouldn’t be impressed by what you don’t understand, and that you shouldn’t try to impress others with what they don’t understand. He also suggests that you keep a notebook with you and write about one page of personal notes every day to practice your writing and also to keep your thoughts organized.

Finally, he dreams of a world in the future where we have human-level AI that cooperates and works with humans, but in which humans are not more “valuable” than AIs. His view for the future sounded somewhat dystopian and he thinks there might be a decent chance of human-level AI as early as 2030-2040. I am a bit more skeptical in this respect, but I guess only time will tell.